
Install Apache Kafka on Debian 11

In this guide, you will learn how to install and configure Apache Kafka on Debian 11.

Apache Kafka is an open-source, distributed publish-subscribe messaging platform built to handle real-time streaming data. It supports distributed streaming, data pipelines, and replay of data feeds at high speed and scale.

Kafka is a broker-based solution that maintains streams of data as records within a cluster of servers. Kafka servers can span multiple data centers and provide data persistence by storing streams of records (messages) in topics across multiple server instances. A topic stores records as an ordered sequence of immutable entries, each consisting of a key, a value, and a timestamp.
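To make the key/value/timestamp structure more concrete, here is a minimal sketch that uses the console tools shipped with Kafka. It assumes the installation path and broker address used later in this guide (/usr/local/kafka-server and localhost:9092); the function names and the topic name demo-topic are hypothetical.

```shell
#!/bin/sh
# Sketch: write and read keyed records with Kafka's console tools.
# Assumes Kafka under /usr/local/kafka-server and a broker on localhost:9092.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka-server}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

# produce_keyed TOPIC reads "key:value" lines from stdin and sends each line
# as one record. The producer assigns the timestamp (CreateTime) by default.
produce_keyed() {
  "$KAFKA_HOME/bin/kafka-console-producer.sh" --bootstrap-server "$BOOTSTRAP" \
    --topic "$1" --property parse.key=true --property key.separator=:
}

# consume_keyed TOPIC prints every record from the start of the topic,
# showing its timestamp and key next to the value.
consume_keyed() {
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" --bootstrap-server "$BOOTSTRAP" \
    --topic "$1" --from-beginning \
    --property print.timestamp=true --property print.key=true
}

# Example (demo-topic is a hypothetical topic name):
#   printf 'user1:login\nuser2:logout\n' | produce_keyed demo-topic
#   consume_keyed demo-topic    # press Ctrl+C to stop
```

Records with the same key always land in the same partition, which is why the key is worth setting even for simple tests.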

Steps To Install and Configure Apache Kafka on Debian 11

To complete this guide, you must log in to your server as a non-root user with sudo privileges and set up a basic firewall. To do this, you can follow our guide on Initial Server Setup with Debian 11.
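A quick way to sanity-check these prerequisites is sketched below. It assumes ufw is your firewall (as used later in this guide) and that Debian installs it at /usr/sbin/ufw, which is often not on a regular user's PATH; the helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: sanity-check the prerequisites for this guide.

# missing_tools CMD... prints each command that is not on PATH
# and returns non-zero if any are missing.
missing_tools() {
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      status=1
    fi
  done
  return "$status"
}

# check_prereqs warns when run as root, then checks for sudo and ufw.
check_prereqs() {
  rc=0
  if [ "$(id -u)" -eq 0 ]; then
    echo "warning: running as root; this guide assumes a non-root sudo user"
  fi
  missing_tools sudo || rc=1
  # ufw lives in /usr/sbin on Debian (assumption stated in the lead-in).
  if [ ! -x /usr/sbin/ufw ]; then
    echo "missing: ufw"
    rc=1
  fi
  return "$rc"
}

# Example: check_prereqs && sudo ufw status
```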

Install Required Packages For Kafka

First, prepare your server for installing Kafka. Update the local package index and upgrade the installed packages with the command below:

sudo apt update && sudo apt upgrade

Then, use the command below to install the required packages, JRE, and JDK on Debian 11:

sudo apt install default-jre wget git unzip default-jdk -y

Set up Apache Kafka on Debian 11

Next, download the latest release of Kafka.
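Apache publishes a SHA-512 checksum next to each release archive (the archive URL with .sha512 appended). Before extracting the archive you download in the steps below, you can optionally check it. This sketch assumes the checksum file may use either the sha512sum layout or GnuPG's --print-md layout (uppercase hex in groups, possibly wrapped over several lines), so it normalizes both before comparing; verify_sha512 is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: compare an archive against a published SHA-512 checksum file.
# verify_sha512 FILE SUMFILE returns 0 only if the digests match.
verify_sha512() {
  file=$1
  sumfile=$2
  if grep -q ':' "$sumfile"; then
    # GnuPG --print-md layout: "name: HEX HEX ..." (may wrap onto more lines).
    expected=$(sed 's/^[^:]*://' "$sumfile" | tr -d ' \t\r\n' | tr 'A-F' 'a-f')
  else
    # sha512sum layout: "hex  name".
    expected=$(awk 'NR == 1 { print tolower($1) }' "$sumfile")
  fi
  actual=$(sha512sum "$file" | awk '{ print $1 }')
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example, from the directory holding the downloaded archive:
#   wget https://downloads.apache.org/kafka/3.3.2/kafka_2.13-3.3.2.tgz.sha512
#   verify_sha512 kafka_2.13-3.3.2.tgz kafka_2.13-3.3.2.tgz.sha512 && echo "checksum OK"
```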

Download Kafka

Visit the Apache Kafka downloads page, find the latest release, and copy the link to the recommended archive under Binary downloads. From your home directory, download it with the wget command:

wget https://downloads.apache.org/kafka/3.3.2/kafka_2.13-3.3.2.tgz

Older releases are eventually moved from downloads.apache.org to archive.apache.org, so adjust the URL if the link no longer works.

Then, create a directory for Kafka under /usr/local and switch to it with the following command. Note that cd is a shell built-in, so it must not be prefixed with sudo:

sudo mkdir /usr/local/kafka-server && cd /usr/local/kafka-server

Next, extract the downloaded archive into this directory:

sudo tar -xvzf ~/kafka_2.13-3.3.2.tgz --strip-components=1

Create Zookeeper Systemd Unit File

Next, create a systemd unit file for Zookeeper so that you can start, stop, and restart it with the usual systemctl commands.

Zookeeper is a top-level Apache project that acts as a centralized service for maintaining naming and configuration data and for providing flexible, robust synchronization within distributed systems. It keeps track of the status of the Kafka cluster nodes, as well as Kafka topics, partitions, and so on.
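Once the services configured below are running, you can inspect this bookkeeping directly with the zookeeper-shell.sh script that ships with Kafka. In this sketch, /brokers/ids and /brokers/topics are the znodes where Kafka registers brokers and topics. The helper names are hypothetical, and it is an assumption that zookeeper-shell.sh prints the child list as its last output line.

```shell
#!/bin/sh
# Sketch: inspect what Zookeeper stores about Kafka.
# Assumes Kafka under /usr/local/kafka-server and Zookeeper on localhost:2181.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka-server}"
ZK="${ZK:-localhost:2181}"

# parse_zk_list turns a znode child list such as "[0, 1]" into one entry per line.
parse_zk_list() {
  sed 's/[][]//g' | tr ',' '\n' | sed 's/^ *//; /^$/d'
}

# zk_children PATH lists the children of a znode.
# Assumption: connection messages come first, the child list is the last line.
zk_children() {
  "$KAFKA_HOME/bin/zookeeper-shell.sh" "$ZK" ls "$1" 2>/dev/null | tail -n 1 | parse_zk_list
}

# Examples (services running):
#   zk_children /brokers/ids      # registered broker IDs
#   zk_children /brokers/topics   # topic names
```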

To create the Zookeeper systemd unit file, use your favorite text editor; here we use vi:

sudo vi /etc/systemd/system/zookeeper.service

Add the following content to the file:

[Unit]
Description=Apache Zookeeper Server
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
ExecStart=/usr/local/kafka-server/bin/zookeeper-server-start.sh /usr/local/kafka-server/config/zookeeper.properties
ExecStop=/usr/local/kafka-server/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target

When you are done, save and close the file.
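If you prefer to script this step instead of using an editor, a heredoc can write the same unit file. This sketch takes the target directory as a parameter, so you can write to a scratch directory first and review the file before installing it; write_zookeeper_unit is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: generate the Zookeeper unit file shown above.
# write_zookeeper_unit DIR [KAFKA_HOME] writes DIR/zookeeper.service.
write_zookeeper_unit() {
  dir=$1
  home=${2:-/usr/local/kafka-server}
  cat > "$dir/zookeeper.service" <<EOF
[Unit]
Description=Apache Zookeeper Server
Requires=network.target remote-fs.target
After=network.target remote-fs.target

[Service]
Type=simple
ExecStart=$home/bin/zookeeper-server-start.sh $home/config/zookeeper.properties
ExecStop=$home/bin/zookeeper-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target
EOF
}

# Example:
#   write_zookeeper_unit /tmp
#   sudo install -m 644 /tmp/zookeeper.service /etc/systemd/system/zookeeper.service
#   systemd-analyze verify /etc/systemd/system/zookeeper.service
```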

Create Systemd Unit File for Kafka

Now create a systemd unit file for Apache Kafka. Again, use your favorite text editor; here we use vi:

sudo vi /etc/systemd/system/kafka.service

Add the following content to the file:

Note: Make sure the JAVA_HOME path in the Environment line points to the Java installation on your system; otherwise, Kafka will not start.

[Unit]
Description=Apache Kafka Server
Documentation=http://kafka.apache.org/documentation.html
Requires=zookeeper.service
After=zookeeper.service

[Service]
Type=simple
Environment="JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64"
ExecStart=/usr/local/kafka-server/bin/kafka-server-start.sh /usr/local/kafka-server/config/server.properties
ExecStop=/usr/local/kafka-server/bin/kafka-server-stop.sh
Restart=on-abnormal

[Install]
WantedBy=multi-user.target

When you are done, save and close the file.
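If you are not sure what to use for JAVA_HOME, you can derive it from the java binary on your PATH. This sketch resolves the /etc/alternatives symlinks with readlink -f (GNU coreutils) and strips the trailing /bin/java; the function names are hypothetical. With default-jdk on Debian 11 amd64, the result should match the /usr/lib/jvm/java-11-openjdk-amd64 path used above.

```shell
#!/bin/sh
# Sketch: find a value for the JAVA_HOME line in kafka.service.

# java_home_from_bin PATH strips a trailing /bin/java from a resolved path.
java_home_from_bin() {
  case "$1" in
    */bin/java) echo "${1%/bin/java}" ;;
    *) return 1 ;;
  esac
}

# detect_java_home resolves the `java` found on PATH through its symlinks.
detect_java_home() {
  java_bin=$(command -v java) || return 1
  java_home_from_bin "$(readlink -f "$java_bin")"
}

# Example: detect_java_home
# Then put the printed path in the Environment="JAVA_HOME=..." line.
```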

Next, reload systemd so it picks up the new unit files, then enable and start both services:

sudo systemctl daemon-reload
sudo systemctl enable --now zookeeper
sudo systemctl enable --now kafka

Verify that your Kafka and Zookeeper services are active and running on Debian 11:

sudo systemctl status kafka

Output

● kafka.service - Apache Kafka Server
     Loaded: loaded (/etc/systemd/system/kafka.service; enabled; vendor preset:>
     Active: active (running) since Mon 2023-01-30 04:30:51 EST; 12s ago
       Docs: http://kafka.apache.org/documentation.html
   Main PID: 5850 (java)
      Tasks: 69 (limit: 4679)
     Memory: 328.2M
        CPU: 7.525s
     CGroup: /system.slice/kafka.service
             └─5850 /usr/lib/jvm/java-11-openjdk-amd64/bin/java -Xmx1G -Xms1G -
...

sudo systemctl status zookeeper

Output

● zookeeper.service - Apache Zookeeper Server
     Loaded: loaded (/etc/systemd/system/zookeeper.service; enabled; vendor pre>
     Active: active (running) since Mon 2023-01-30 04:30:45 EST; 1min 12s ago
   Main PID: 5473 (java)
      Tasks: 32 (limit: 4679)
     Memory: 72.6M
        CPU: 2.811s
     CGroup: /system.slice/zookeeper.service
             └─5473 java -Xmx512M -Xms512M -server -XX:+UseG1GC -XX:MaxGCPauseM>
...

Install CMAK on Debian 11

CMAK (Cluster Manager for Apache Kafka, previously known as Kafka Manager) is an open-source tool, developed by Yahoo, for managing Apache Kafka clusters. Clone the CMAK repository from GitHub with the commands below:

cd ~
git clone https://github.com/yahoo/CMAK.git

Output

Cloning into 'CMAK'...
remote: Enumerating objects: 6542, done.
remote: Counting objects: 100% (266/266), done.
remote: Compressing objects: 100% (142/142), done.
remote: Total 6542 (delta 150), reused 187 (delta 112), pack-reused 6276
Receiving objects: 100% (6542/6542), 3.97 MiB | 7.55 MiB/s, done.
Resolving deltas: 100% (4211/4211), done.
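CMAK 3.x is, as we understand its README, built and run on Java 11 or newer; on Debian 11, default-jdk provides OpenJDK 11. If you are unsure which Java version is active, this sketch parses the output of java -version. java_major is a hypothetical helper, not part of CMAK or Kafka.

```shell
#!/bin/sh
# Sketch: extract the Java major version from `java -version` output.
java_major() {
  # Pull the quoted version string, e.g. 11.0.18 or 1.8.0_352.
  v=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$v" in
    1.*) v=${v#1.} ;;  # legacy scheme: 1.8.x means Java 8
  esac
  # Keep everything before the first '.', '_' or '-'.
  echo "${v%%[._-]*}"
}

# Example (java -version writes to stderr):
#   major=$(java_major "$(java -version 2>&1)")
#   [ "${major:-0}" -ge 11 ] && echo "Java $major is new enough for CMAK 3.x"
```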

Configure Cluster Manager for Kafka

Next, make some configuration changes in the CMAK config file. Open the file with your favorite text editor; here we use vi:

sudo vi ~/CMAK/conf/application.conf

Set cmak.zkhosts to your Zookeeper host and port, for example cmak.zkhosts="my.zookeeper.host.com:2181". You can also specify multiple Zookeeper hosts by separating them with commas, like so: cmak.zkhosts="my.zookeeper.host.com:2181,other.zookeeper.host.com:2181". The host names can also be IP addresses. For the single local Zookeeper set up in this guide, use:

cmak.zkhosts="localhost:2181"

When you are done, save and close the file.
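To make this change without opening an editor, a small sed-based sketch can rewrite the setting. It validates the comma-separated host:port list first and relies on GNU sed's -i option; set_zkhosts is a hypothetical helper. Run it as the user who owns the CMAK checkout.

```shell
#!/bin/sh
# Sketch: set cmak.zkhosts in CMAK's application.conf.
# Usage: set_zkhosts CONF_FILE "host1:2181,host2:2181"
set_zkhosts() {
  conf=$1
  hosts=$2
  # Accept only a comma-separated list of host:port entries.
  if ! printf '%s\n' "$hosts" | grep -Eq '^[A-Za-z0-9.-]+:[0-9]+(,[A-Za-z0-9.-]+:[0-9]+)*$'; then
    echo "invalid host list: $hosts" >&2
    return 1
  fi
  # Rewrite only the quoted assignment; a line such as cmak.zkhosts=${?ZK_HOSTS}
  # (if present) is left alone, so that environment variable can still override it.
  sed -i "s|^cmak\.zkhosts=\".*\"|cmak.zkhosts=\"$hosts\"|" "$conf"
  grep -qF "cmak.zkhosts=\"$hosts\"" "$conf"
}

# Example: set_zkhosts ~/CMAK/conf/application.conf "localhost:2181"
```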

Now build a zip file that can be used to deploy the application. The build downloads dependencies and compiles the project, so you will see a lot of output in your terminal, and it will take some time to complete:

cd ~/CMAK/
./sbt clean dist

When it is completed, you will get output similar to the following:

Output

[info] Your package is ready in /root/CMAK/target/universal/cmak-3.0.0.7.zip

Change into the directory where the zip file is located and unzip it with the commands below (adjust the version number to match your build):

cd ~/CMAK/target/universal
unzip cmak-3.0.0.7.zip
cd cmak-3.0.0.7

Access CMAK Service

When you have finished the previous step, run Cluster Manager for Apache Kafka from the extracted directory with the command below:

bin/cmak

By default, CMAK listens on port 9000, so open your browser and go to http://ip-or-domain-name-of-server:9000. If your firewall is enabled, allow external access to the port:

sudo ufw allow 9000

You should see the following interface:

Add Cluster From the CMAK

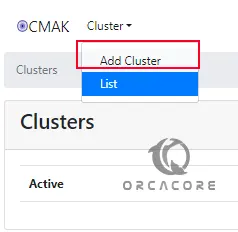

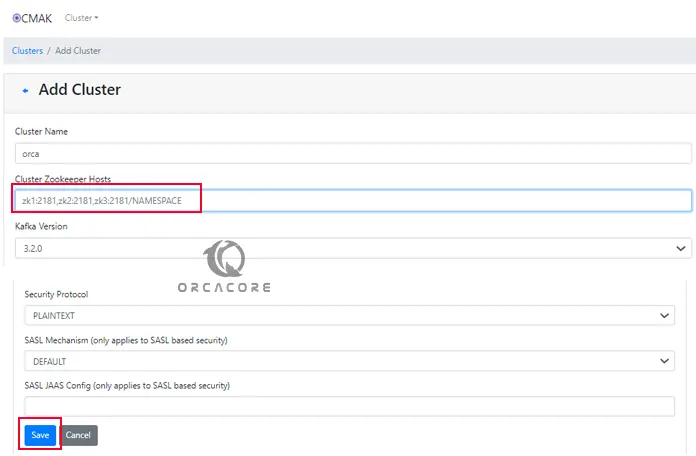

From there, you can easily add the clusters. To do this, click cluster, and add cluster.

You will be presented with a page like the one shown below. Fill in the form with the requested details (Cluster Name, Zookeeper Hosts, and so on). If you have several Zookeeper hosts, separate them with commas. Fill in the other details according to your needs.

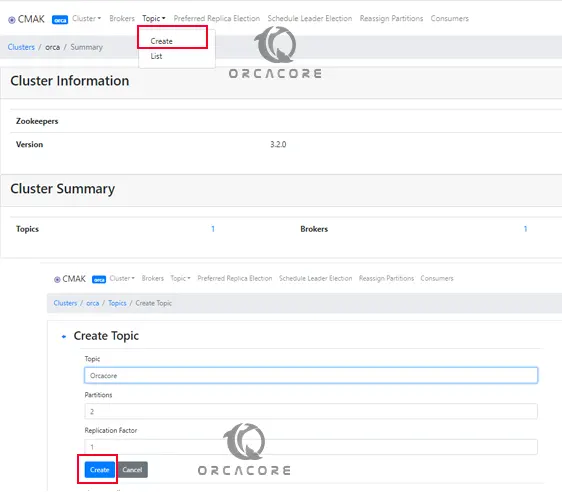

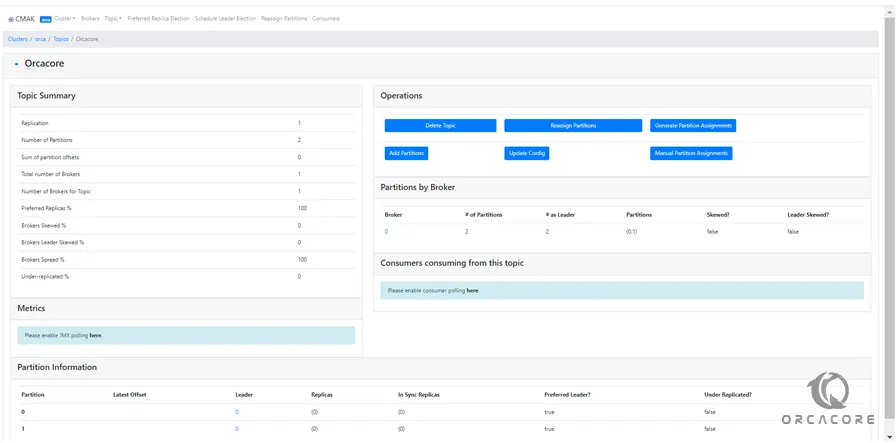

Create a Topic in the CMAK interface

From your newly added cluster, click Topic, then Create. Enter the details for the new topic (Replication Factor, Partitions, and others), then click "Create".

Then, click Cluster view to see your topics.

From there, you can add, delete, and configure topics.
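The same topic operations are also available from the command line through kafka-topics.sh, which ships with Kafka. This sketch wraps them in small shell functions, assuming the /usr/local/kafka-server path and a broker on localhost:9092; the function names are hypothetical. It also adds a guard, because Kafka refuses a replication factor larger than the number of available brokers, so on the single-broker setup in this guide the replication factor must be 1.

```shell
#!/bin/sh
# Sketch: CLI equivalents of the CMAK topic actions, using kafka-topics.sh.
# Assumes Kafka under /usr/local/kafka-server and a broker on localhost:9092.
KAFKA_HOME="${KAFKA_HOME:-/usr/local/kafka-server}"
BOOTSTRAP="${BOOTSTRAP:-localhost:9092}"

topics() { "$KAFKA_HOME/bin/kafka-topics.sh" --bootstrap-server "$BOOTSTRAP" "$@"; }

# check_rf RF BROKERS succeeds only if 1 <= RF <= BROKERS.
check_rf() {
  [ "$1" -ge 1 ] 2>/dev/null && [ "$1" -le "$2" ] 2>/dev/null
}

# create_topic NAME PARTITIONS REPLICATION_FACTOR BROKER_COUNT
create_topic() {
  if ! check_rf "$3" "$4"; then
    echo "replication factor $3 is not possible with $4 broker(s)" >&2
    return 1
  fi
  topics --create --topic "$1" --partitions "$2" --replication-factor "$3"
}

list_topics()    { topics --list; }
describe_topic() { topics --describe --topic "$1"; }
delete_topic()   { topics --delete --topic "$1"; }

# Example on a single broker:
#   create_topic demo-topic 3 1 1
#   list_topics
#   describe_topic demo-topic
```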

Conclusion

At this point, you have learned to install and configure Apache Kafka on Debian 11 and to manage it with CMAK.

We hope you enjoyed it.